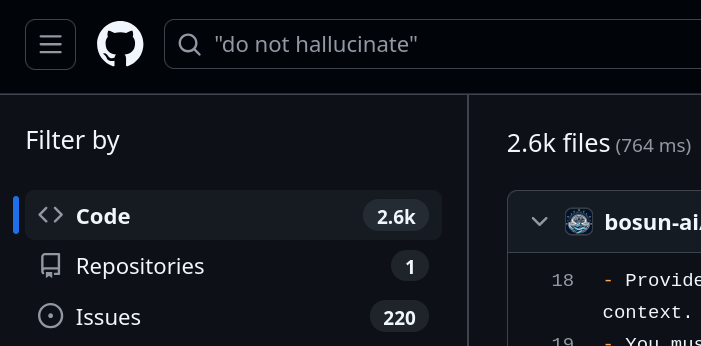

Vibe coders’ frustration can be seen in GitHub search

“Wait.. I know how to fix this..”

- frustrated vibe coders

LLMs have made coding more accessible, but this doesn’t mean you don’t need to learn software development. These techniques can slightly improve the outputs of an LLM, but it doesn’t ‘know’ anything. Telling it “do not hallucinate” doesn’t make it follow that instruction; it only shifts the probabilities of the tokens it outputs. An LLM is lossy compression with some targeted reinforcement learning to make it act like a chatbot when given input. “Hallucination” is just the output we don’t like: the weeds in the garden of text output. What counts as a weed depends on the gardener, and on what they do and don’t want to grow.
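To make the “do not hallucinate” point concrete, here is a toy sketch in plain Python. It is not how any real model is implemented: the three candidate tokens, their scores, and the size of the prompt’s nudge are all made-up numbers chosen for illustration. The only thing it shows is that nudging scores before a softmax changes the odds without ruling anything out.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

# Made-up scores for three candidate next tokens (not from any real model).
base_logits = {"correct_fact": 2.0, "plausible_fiction": 1.5, "nonsense": -1.0}

# Hypothetical effect of adding "do not hallucinate" to the prompt:
# the scores move a little, but no candidate is removed.
prompt_shift = {"correct_fact": 0.3, "plausible_fiction": -0.3, "nonsense": 0.0}
nudged_logits = {tok: base_logits[tok] + prompt_shift[tok] for tok in base_logits}

before = softmax(base_logits)
after = softmax(nudged_logits)

for tok in base_logits:
    print(f"{tok:18s} before={before[tok]:.3f} after={after[tok]:.3f}")
```

In this toy setup the “plausible_fiction” token becomes less likely after the nudge, but its probability never reaches zero, so sampling can still pick it. You are adjusting the odds, not giving an order.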

These companies are selling you something, and the vast majority of tech media have just been their PR vehicle rather than actually asking difficult questions.

Neural networks are useful for certain types of tasks, and this AI hype cycle has at least taught me that they can be useful for a range of well-defined use cases. If I were a betting person, I would bet on small models targeted at specific use cases to win the majority of the economic value, not giant LLMs. LLMs can assist with learning the language of topics you are not familiar with, but you still have to do the understanding. Learning PyTorch, for example, and how these things are put together, is a much better use of effort and time than endlessly tweaking your prompt.

If you want some honest investigation into the current AI hype cycle, check out Ed Zitron’s Better Offline podcast.